HDFS Block Placement Policy

August 4, 2015

When a file is uploaded into HDFS, it is divided into blocks, and HDFS has to decide where in the cluster to place each of them. The HDFS block placement policy defines how and where the replicas of those blocks are placed.

Why Is the Placement Policy Important?

The placement policy matters for two reasons. First, it tries to keep the cluster balanced, so that blocks are spread evenly across the nodes. Second, it keeps every block properly redundant. There is no point in storing all the replicas of a block on one node, because that node would become a single point of failure: losing it would mean losing the block.
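The redundancy argument can be made concrete with a small check. The following Python sketch assumes a hypothetical cluster described as a mapping from node name to rack name; `survives_failures` is an illustrative helper, not part of Hadoop. It reports whether a block stays readable after any single node or any single rack is lost.

```python
from typing import Dict, List


def survives_failures(replica_nodes: List[str], topology: Dict[str, str]) -> Dict[str, bool]:
    """Check whether a block stays readable after losing any one node or any one rack.

    replica_nodes: names of the nodes holding a copy of the block.
    topology: maps each node name to its rack name (a hypothetical cluster layout).
    """
    nodes = set(replica_nodes)
    racks = {topology[node] for node in nodes}
    return {
        # Losing any single node still leaves a copy only if copies sit on 2+ nodes.
        "single_node_failure": len(nodes) >= 2,
        # Likewise, surviving the loss of a whole rack needs copies on 2+ racks.
        "single_rack_failure": len(racks) >= 2,
    }


if __name__ == "__main__":
    topology = {"dn1": "/rack1", "dn2": "/rack1", "dn3": "/rack2"}
    # One copy on one node: {'single_node_failure': False, 'single_rack_failure': False}
    print(survives_failures(["dn1"], topology))
    # Copies spread over two racks: {'single_node_failure': True, 'single_rack_failure': True}
    print(survives_failures(["dn1", "dn2", "dn3"], topology))
```

A block whose copies all live on one node fails both checks, which is exactly the single point of failure described above.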

Hadoop has changed its block placement policy across versions, and several strategies exist. Since Hadoop 0.21.0, the placement strategy is pluggable.
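To illustrate what "pluggable" means in general, here is a minimal Python sketch in which the placement logic sits behind an interface and can be swapped without changing the code that asks for targets. `PlacementStrategy`, `RandomPlacement`, `WriterFirstPlacement`, and `place_block` are hypothetical names invented for this example; they are not Hadoop's actual classes or configuration.

```python
import random
from abc import ABC, abstractmethod
from typing import Dict, List, Optional


class PlacementStrategy(ABC):
    """Hypothetical strategy interface (illustration only, not Hadoop's API)."""

    @abstractmethod
    def choose_targets(self, writer: Optional[str], replicas: int,
                       topology: Dict[str, str]) -> List[str]:
        """Return `replicas` distinct node names for one block."""


class RandomPlacement(PlacementStrategy):
    """Ignores the writer and the racks; picks distinct nodes at random."""

    def __init__(self, seed: Optional[int] = None) -> None:
        self._rng = random.Random(seed)

    def choose_targets(self, writer, replicas, topology):
        return self._rng.sample(sorted(topology), replicas)


class WriterFirstPlacement(PlacementStrategy):
    """Puts the first copy on the writer's node (if it is in the cluster)."""

    def __init__(self, seed: Optional[int] = None) -> None:
        self._rng = random.Random(seed)

    def choose_targets(self, writer, replicas, topology):
        first = [writer] if writer in topology else []
        others = sorted(n for n in topology if n not in first)
        return first + self._rng.sample(others, replicas - len(first))


def place_block(strategy: PlacementStrategy, writer: Optional[str],
                replicas: int, topology: Dict[str, str]) -> List[str]:
    # The caller depends only on the interface, so a different strategy
    # can be plugged in without touching this function.
    return strategy.choose_targets(writer, replicas, topology)


if __name__ == "__main__":
    cluster = {"dn1": "/rack1", "dn2": "/rack1", "dn3": "/rack2", "dn4": "/rack2"}
    for strategy in (RandomPlacement(seed=1), WriterFirstPlacement(seed=1)):
        print(type(strategy).__name__, place_block(strategy, "dn1", 3, cluster))
```

The design choice being shown is only the separation between "who needs targets" and "how targets are chosen"; the two toy strategies here are deliberately simpler than any real HDFS policy.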

Default Placement Policy

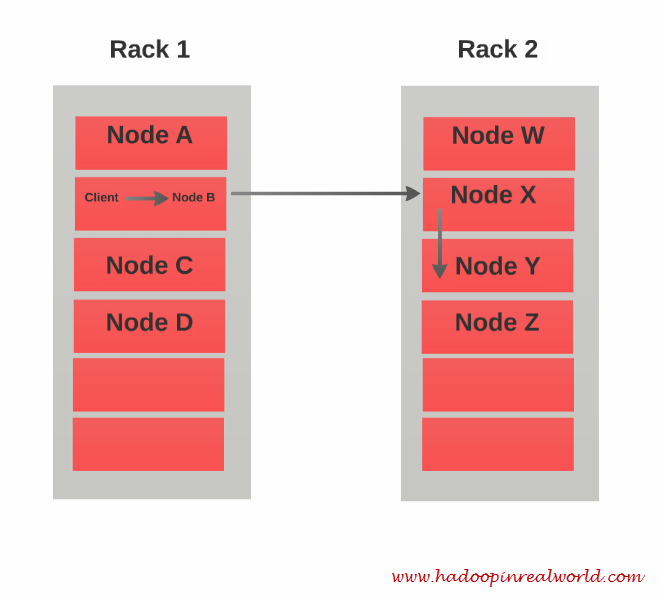

The first replica is stored on the same node as the client that is uploading the file (when that client runs on a DataNode in the cluster).

The second replica is stored on a node in a different rack from the one holding the first replica.

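The third replica is stored in the same rack as the second replica, but on a different node. This way the block survives the loss of an entire rack, while only one copy has to travel between racks when the block is written.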
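The three rules above can be simulated in a few lines. This is a minimal Python sketch, assuming a replication factor of 3 and a hypothetical cluster given as a node-to-rack mapping; `default_placement` is an illustrative name, not a Hadoop function. The real NameNode also weighs factors such as free space and node load and has fallbacks for small clusters, all of which this sketch ignores. When the writer is not a cluster node, the sketch simply picks a random node, which is a simplification.

```python
import random
from typing import Dict, List, Optional


def default_placement(writer: Optional[str], topology: Dict[str, str],
                      rng: random.Random) -> List[str]:
    """Choose nodes for three replicas following the default rules described above.

    topology maps node name -> rack name for a hypothetical cluster.
    """
    nodes = sorted(topology)
    by_rack: Dict[str, List[str]] = {}
    for node in nodes:
        by_rack.setdefault(topology[node], []).append(node)

    # Replica 1: the writer's own node when the writer is part of the cluster;
    # otherwise (a simplification) any random node.
    first = writer if writer in topology else rng.choice(nodes)

    # Replica 2: a node on a different rack. Only racks with at least two nodes
    # qualify, so that replica 3 can share the rack on a different node.
    remote = [n for n in nodes
              if topology[n] != topology[first] and len(by_rack[topology[n]]) >= 2]
    if not remote:
        raise ValueError("sketch needs a remote rack with at least two nodes")
    second = rng.choice(remote)

    # Replica 3: another node on the same rack as replica 2.
    third = rng.choice([n for n in by_rack[topology[second]] if n != second])
    return [first, second, third]


if __name__ == "__main__":
    cluster = {"dn1": "/rack1", "dn2": "/rack1", "dn3": "/rack2", "dn4": "/rack2"}
    print(default_placement("dn1", cluster, random.Random(42)))
```

With a client on `dn1` in this four-node cluster, the first replica always lands on `dn1` and the other two always land on `dn3` and `dn4`, in a random order.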