July 12, 2015

How to change default replication factor?

What Is Replication Factor?

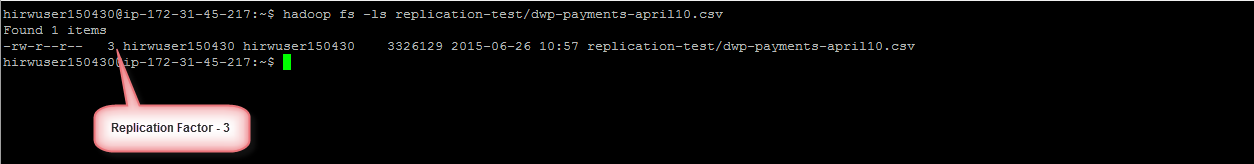

The replication factor dictates how many copies of each block are kept in your cluster. It is 3 by default, so any file you create in HDFS has a replication factor of 3, and each block of the file is stored on 3 different nodes in your cluster.

Change Replication Factor – Why?

Let’s say you have a 1 TB dataset and the default replication factor in your cluster is 3. Each block of the dataset is then replicated 3 times, so the dataset consumes 3 TB of raw storage. Now suppose this dataset is not critical, meaning that losing or corrupting it would have no business impact. In that case you can set the replication factor of just this dataset to 1 and leave the other files and datasets in HDFS untouched.
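To make the storage trade-off concrete, here is a small shell sketch of the arithmetic. The function name `raw_storage_tb` is hypothetical, and the calculation is a simplification: it multiplies the logical size by the replication factor and ignores any other overhead.

```shell
# Hypothetical helper: raw cluster storage (in TB) consumed by a dataset,
# given its logical size in TB ($1) and its replication factor ($2).
raw_storage_tb() {
    size_tb=$1
    replication=$2
    echo $((size_tb * replication))
}

# The 1 TB dataset from the example, at replication factor 3 and then 1.
echo "replication 3: $(raw_storage_tb 1 3) TB raw"
echo "replication 1: $(raw_storage_tb 1 1) TB raw"
```

Dropping the factor from 3 to 1 frees 2 TB of raw capacity for this dataset, at the cost of having no spare copy if a node holding one of its blocks fails.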

Let’s Try It

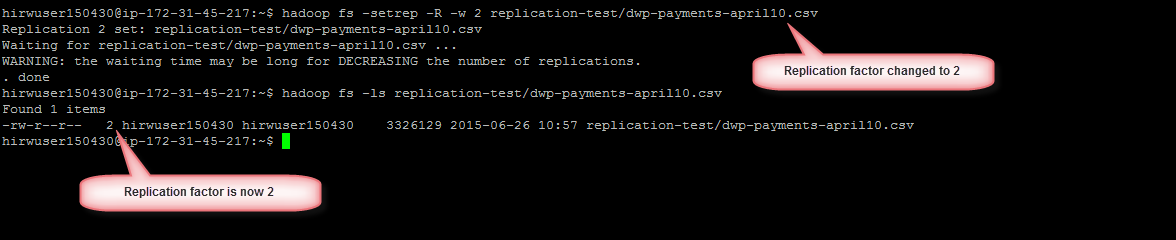

Use the -setrep command to change the replication factor of files that already exist in HDFS. The -R flag recursively changes the replication factor of all files under the specified folder.

```shell
# Create the folder if it doesn't exist (-p avoids an error when it already does)
hadoop fs -mkdir -p replication-test

# Upload a file into the folder
hadoop fs -copyFromLocal /hirw-starterkit/hdfs/commands/dwp-payments-april10.csv replication-test

# Check replication (the second column of the listing is the replication factor)
hadoop fs -ls replication-test/dwp-payments-april10.csv

# Change replication to 2 and wait (-w) until the change completes
hadoop fs -setrep -R -w 2 replication-test/dwp-payments-april10.csv
```
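As a sketch of one way to confirm the change from a script, `hadoop fs -stat %r` prints the replication factor of a file. The wrapper function `has_replication` below is a hypothetical name introduced for illustration only.

```shell
# Hypothetical helper: succeed if the HDFS file at $1 has replication factor $2.
# Relies on `hadoop fs -stat %r`, which prints a file's replication factor.
has_replication() {
    path=$1
    expected=$2
    # Return 2 if the stat call itself fails (for example, the path is missing).
    actual=$(hadoop fs -stat %r "$path") || return 2
    [ "$actual" = "$expected" ]
}
```

For example, `has_replication replication-test/dwp-payments-april10.csv 2 && echo "replication is 2"` should print the message once the -setrep command above has finished.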

Note that if you set the replication factor to a number higher than the number of DataNodes in the cluster, the command (when run with -w) keeps waiting until every block reaches the desired number of replicas. In practice, it waits until you add more nodes to the cluster.
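One possible way to avoid an indefinite wait is to compare the requested factor with the number of live DataNodes and drop `-w` when the target cannot be met. This is only a sketch: the function name `safe_setrep` is hypothetical, and the DataNode count is supplied by the caller (for example, read from `hdfs dfsadmin -report`) rather than detected automatically.

```shell
# Hypothetical helper: set replication factor $1 on HDFS path $2,
# given $3 live DataNodes (supplied by the caller, not detected here).
# Waits for replication (-w) only when the cluster has enough nodes.
safe_setrep() {
    factor=$1
    path=$2
    live_nodes=$3
    if [ "$factor" -gt "$live_nodes" ]; then
        echo "warning: factor $factor exceeds $live_nodes live DataNodes; not waiting" >&2
        hadoop fs -setrep "$factor" "$path"
    else
        hadoop fs -setrep -w "$factor" "$path"
    fi
}
```

Without `-w` the command returns as soon as the new target is recorded; the blocks stay under-replicated until enough nodes are available.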

You can also use the dfs.replication property to specify the replication factor when you upload files into HDFS. This applies only to newly created files, not to files that already exist.
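As an illustration, the property can be passed for a single command with the generic `-D` option. The wrapper name `put_with_replication` is hypothetical; the file and folder in the usage line are the ones from the walkthrough above.

```shell
# Hypothetical helper: upload local file $2 to HDFS path $3 with replication factor $1.
# -D dfs.replication=N overrides the configured default for this command only.
put_with_replication() {
    factor=$1
    src=$2
    dest=$3
    hadoop fs -D dfs.replication="$factor" -copyFromLocal "$src" "$dest"
}
```

For example, `put_with_replication 2 /hirw-starterkit/hdfs/commands/dwp-payments-april10.csv replication-test` uploads the file with 2 replicas per block. To change the default for all new files, set dfs.replication in hdfs-site.xml instead.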